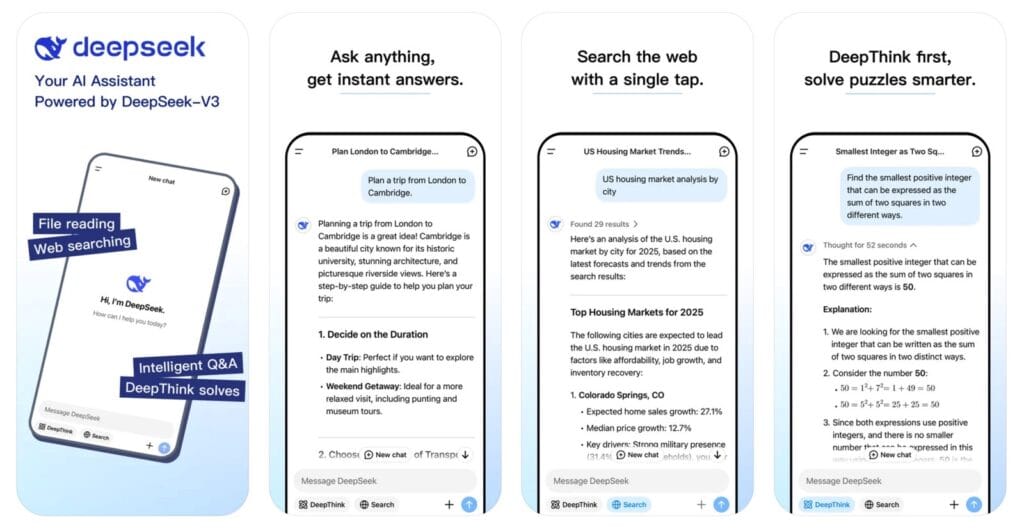

DeepSeek has quickly risen to the top of the App Store, becoming the most downloaded iPhone app and dethroning ChatGPT. That’s not a surprise if you’ve followed the genAI space for the past couple of years. AI apps can go viral, and the Chinese app did just that after news spread that DeepSeek R1 is a reasoning AI as good as ChatGPT o1 that cost only a fraction as much to train.

The same news tanked the US market, as investors panic-sold hundreds of billions of dollars’ worth of stock on Monday, fearing that AI hardware from NVIDIA and others would not be as important to developing good AI. Those fears were largely exaggerated, and the market will recover.

With all that in mind, I can’t blame you if you want to try DeepSeek and see what it’s all about. However, I’ll remind you again of the two big reasons why you should avoid the Chinese AI software.

First, all your data goes to China, and there’s no telling what happens with it, despite the seemingly solid privacy policy DeepSeek put in place.

Second, DeepSeek’s instructions include plenty of censorship, which is applied in real time as you chat.

There is one way to try DeepSeek safely and without the built-in censorship instructions, but it involves installing DeepSeek on your PC. Fortunately, running DeepSeek locally on your Windows, Mac, or Linux computer is incredibly easy and will cost you nothing.

You don’t have to steal or illegally download any DeepSeek files to do it. In addition to coming up with clever ways to overcome the US sanctions on AI hardware and train a powerful AI, DeepSeek also thought of another brilliant move: It made DeepSeek open-source, which means it’s available for free.

You can download the AI models to your computer, run them locally without an internet connection, and test DeepSeek AI all you want. This is easily the best avenue for developers, AI researchers, and DeepSeek rivals to try out DeepSeek’s various models.

It might seem daunting for less experienced users who would prefer the iPhone, Android, or web experiences. But, as Beebom explains, getting the DeepSeek R1 models to run on your computer is very easy.

You need to install either LM Studio or Ollama on your computer to get started. Both apps are free, so you don’t have to pay anything.

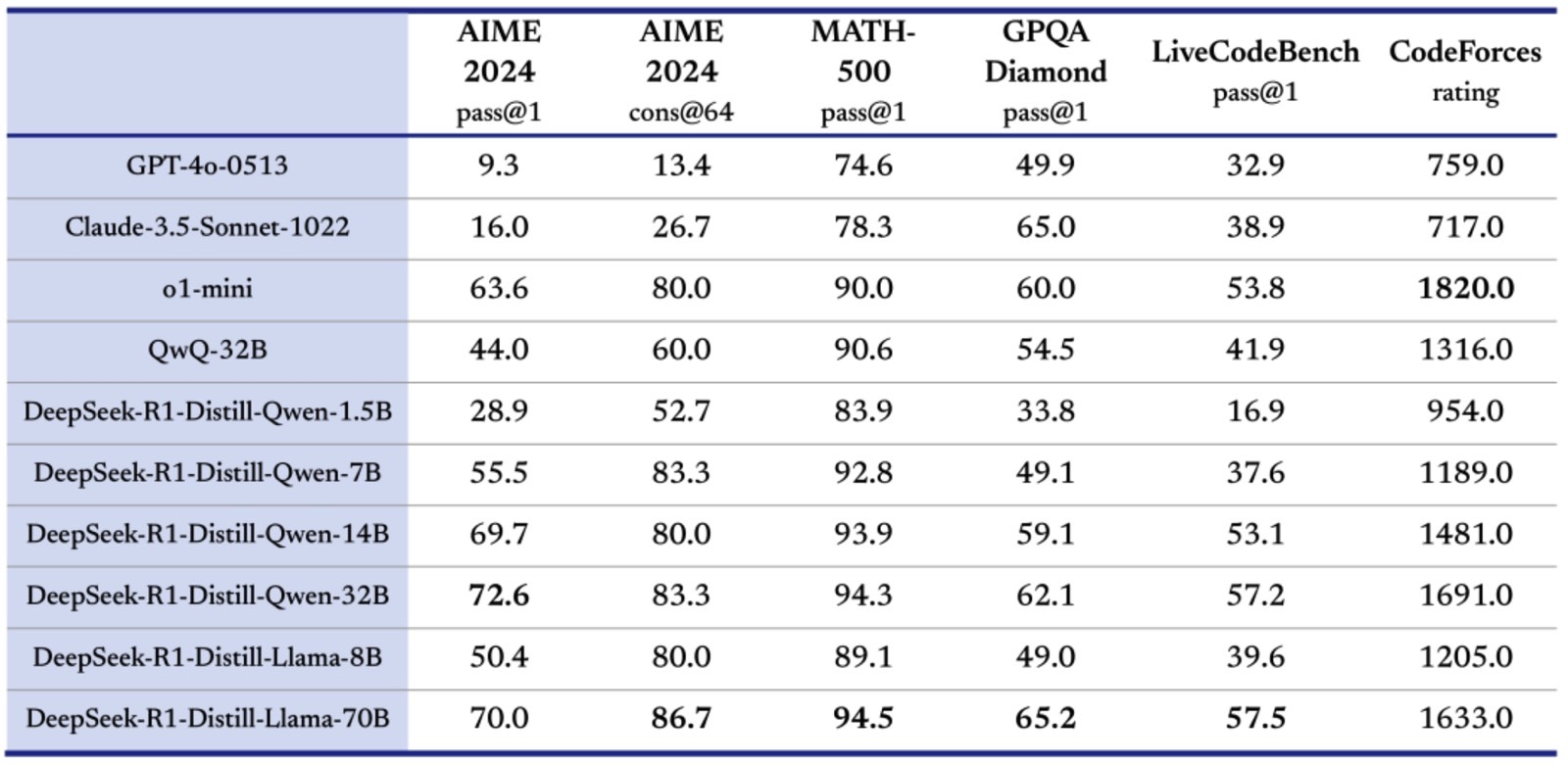

The various DeepSeek R1 distillations and their performance. Image source: DeepSeek

Next, you need to choose a DeepSeek R1 model from the various options available. Running the full R1 model might be impossible without some serious hardware, but DeepSeek has distilled various R1 versions for you to try.

The LM Studio app lets you search for a distilled DeepSeek R1 model like DeepSeek R1 Distill (Qwen 7B). This one requires 5GB of storage and 8GB of RAM to run. Most modern computers should have no problem doing it.

Once you install DeepSeek R1 via LM Studio, you can start chatting with the AI from within the app.

Ollama isn’t as visually polished as LM Studio: you have to run DeepSeek R1 in Command Prompt on Windows PCs or Terminal on Mac. But the good news is that Ollama supports an even smaller DeepSeek R1 distillation (1.5B parameters), which uses just 1.1GB of RAM. This could be a good fit for systems with fewer hardware resources.
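If you’d rather script your chats than type them in the terminal, Ollama also exposes a local HTTP endpoint. Here is a minimal Python sketch, not an official recipe. It assumes Ollama is running on its default address (`http://localhost:11434`) and that the 1.5B distillation is already downloaded under the tag `deepseek-r1:1.5b` (for example via `ollama run deepseek-r1:1.5b`). The helper names `build_payload` and `ask` are made up for illustration.

```python
import json
import urllib.request

# Assumptions: Ollama's default local address and the model tag used in
# Ollama's library for the 1.5B distillation. Adjust both if yours differ.
OLLAMA_URL = "http://localhost:11434/api/generate"
MODEL_TAG = "deepseek-r1:1.5b"


def build_payload(prompt, model=MODEL_TAG):
    """Build the JSON body for a single, non-streaming completion."""
    return {"model": model, "prompt": prompt, "stream": False}


def ask(prompt, model=MODEL_TAG, url=OLLAMA_URL):
    """Send the prompt to the local Ollama server and return its reply text."""
    data = json.dumps(build_payload(prompt, model)).encode("utf-8")
    request = urllib.request.Request(
        url, data=data, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    # With "stream": False, the full answer arrives in the "response" field.
    return body["response"]
```

Calling `ask("What is a distilled model?")` with Ollama running should return the model’s answer as a string. Nothing leaves your machine, since the request only goes to `localhost`.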

Then again, if memory isn’t a problem, you can run a better DeepSeek R1 distilled model. You can choose anything from 7 billion to 70 billion parameters, but the RAM requirements increase with model size. The 7B DeepSeek model will need about 5GB of memory.
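To make that trade-off concrete, here is a small Python sketch that picks the largest distillation that fits in a given amount of free memory. It only uses the two approximate figures quoted in this article (1.1GB for the 1.5B model, about 5GB for the 7B one). The tag names are assumed to follow Ollama’s naming, and `pick_model` is a hypothetical helper, so treat it as a rough guide rather than a precise sizing tool.

```python
# Approximate memory needs in GB, taken from the figures quoted in this
# article. Tag names are assumed; larger distillations are left out because
# the article gives no numbers for them.
MODEL_MEMORY_GB = [
    ("deepseek-r1:1.5b", 1.1),
    ("deepseek-r1:7b", 5.0),
]


def pick_model(available_gb):
    """Return the largest listed model that fits, or None if none do."""
    fitting = [(tag, need) for tag, need in MODEL_MEMORY_GB if need <= available_gb]
    if not fitting:
        return None
    # The model with the highest memory need is the largest one that still fits.
    return max(fitting, key=lambda item: item[1])[0]
```

For instance, `pick_model(4)` falls back to the 1.5B model, while `pick_model(16)` selects the 7B one. Leave yourself some headroom in practice, since your operating system and other apps need memory too.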

The table above, which DeepSeek shared on its blog, lists the various DeepSeek R1 distillations and their performance across multiple tests.

For a visual aid on running DeepSeek on your PC, check out the Beebom guide, which has plenty of screenshots that should come in handy.