When it demoed Project Astra at I/O 2024 in May, Google teased smart glasses for the first time. The company did the same thing during Wednesday’s big Gemini 2.0 announcement, where the wearable was part of a longer Project Astra demo. Google also suggested Gemini AI smart glasses might be coming soon.

At the time, I thought Google was simply showing off a prototype of a pair of Samsung XR glasses that would be unveiled next month during the Galaxy S25 Unpacked press event. Little did I know that Samsung and Google had bigger things in mind.

A day later, the two companies unveiled Project Moohan, a Vision Pro spatial computer rival. Google also announced the Android XR platform that will power it. Both Samsung and Google mentioned smart glasses in their announcements. But Google’s was more impressive, as the company showed off AR features for smart glasses powered by Gemini AI.

Google didn’t offer a name for the smart glasses or a release date. But the company did give a select few people a hands-on experience with Project Moohan and the unnamed smart glasses. It turns out the Gemini AI wearable is quite interesting, seemingly delivering the Google Glass experience that Google failed to offer more than a decade ago.

We all remember the Google Glass project, which was, in retrospect, ahead of its time. That smart glasses concept sparked privacy worries at a time when Google wasn’t exactly known for great privacy. Also, there was no generative AI at the time to truly make Google Glass useful.

Fast-forward to late 2024, and Google seems confident enough to demo a product that could become a must-have accessory for people who want AI assistance all the time. Wired tested Google’s Gemini AI glasses and Project Moohan, finding the former the more compelling product.

The glasses seem to take inspiration from the North Focals, smart glasses from a company that Google purchased a few years ago. But they’re slimmer and more comfortable than the Focals.

Even so, the smart glasses have thick arms and even thicker rims around the eyes, which is what you’d expect from glasses that incorporate AR abilities. They come with clear or sunglasses lenses and, like Moohan, will support prescription lenses.

North Focals glasses. Image source: North

When it comes to AR capabilities, the glasses come in three versions. The no-AR model is presumably the cheapest, as it lacks a display. Then there’s a monocular model, which projects images onto one of the lenses.

The best experience, and probably the most expensive, comes from the binocular display version that will center AR images, as seen in the photos above and below.

The processing is passed on to a nearby smartphone, presumably a Pixel phone that connects wirelessly to the glasses. That’s where Gemini resides, and the AI is ready to help with a tap on the arm of the glasses. A tap on the side also brings up the display in those models that support AR.

Google Pixel 9 Pro Fold: Gemini support. Image source: Christian de Looper for BGR

The camera is also built into the frame, and an LED turns on when it is active. Built-in microphones pick up your commands for Gemini, and a speaker in the frames lets you hear the AI talk back.

The glasses are meant to last a full day on a charge, though battery life will probably depend on how much you use them. The hands-on report doesn’t include actual battery figures, as it’s too early for that.

The report explains the various scenarios where AI smart glasses will be useful. For example, the glasses support Google Maps navigation, a feature Google showed in the Android XR platform announcement (image below).

Google Maps AR navigation on smart glasses. Image source: Google

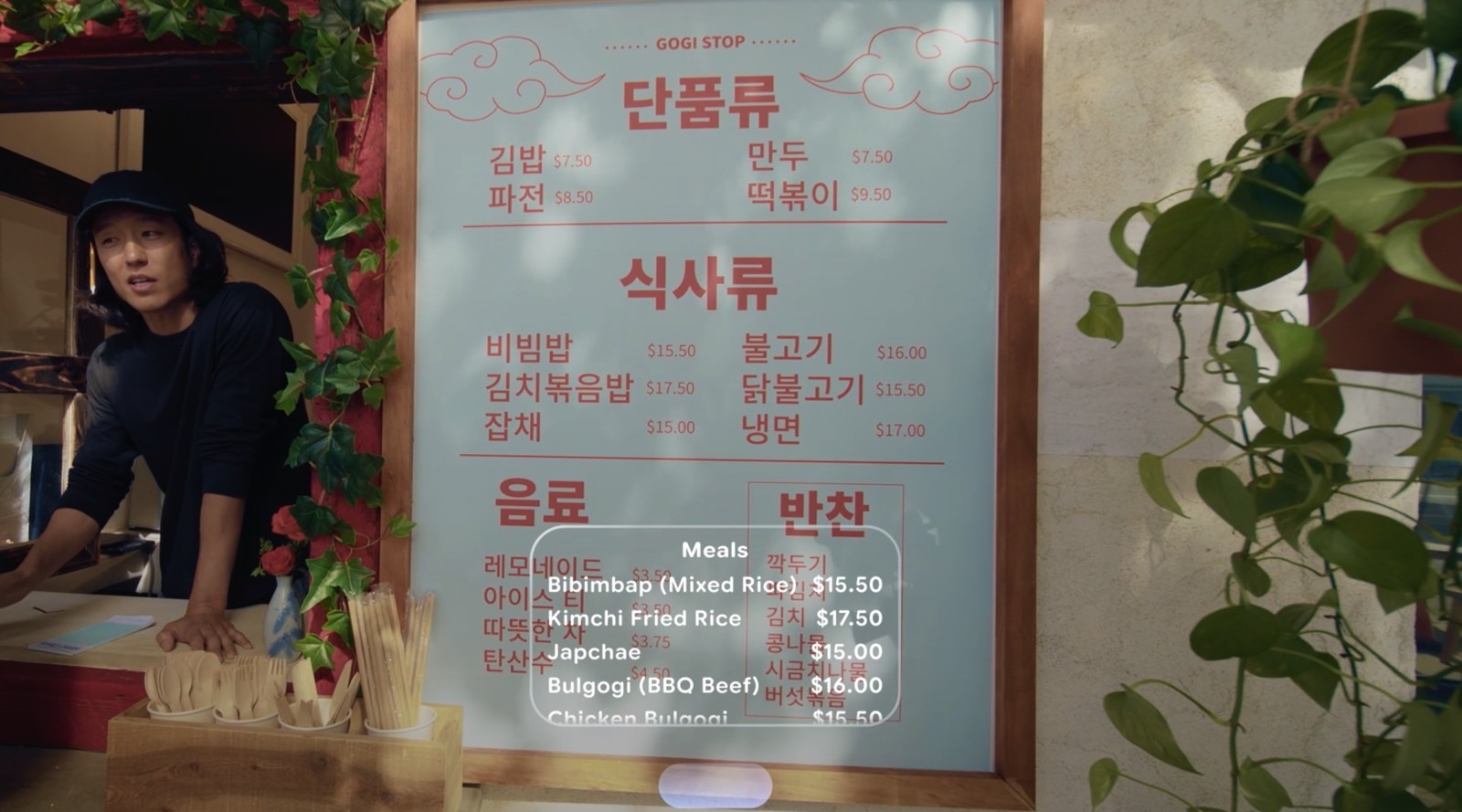

You also get AI summaries of notifications displayed in front of your eyes. The same goes for real-time translation of text. Impressively, the glasses can also translate spoken foreign languages in real time. Gemini will caption conversations, too, a great feature for people with hearing loss. And Gemini can answer in multiple languages, a feature ChatGPT’s Advanced Voice Mode also supports.

The AR capabilities do not stop there. The glasses will show you previews of photos you take, and you’ll be able to play video when needed. The display experience isn’t the greatest, which is understandable. But it still sounds like the hands-on demo was a success.

Gemini powers all the smart AI features, like real-time translation and captioning. The AI can also summarize content you see while on the go, like the page of a book. Gemini has a short-term memory, too, so it can recall some of the things you did and saw in the past few minutes.

Real-time translation on Android XR smart glasses.

Interestingly, Gemini can also identify products and offer instructions on how to use them. In this hands-on, the Wired reporter asked how to use a Nespresso machine.

As impressive as the demo might sound, Google wasn’t ready to provide a release date or pricing information. The Gemini AI smart glasses it unveiled are clearly superior to the Ray-Ban Meta smart glasses, which offer AI support but lack the AR component. I’d expect Google’s glasses to be more expensive, assuming Google wants to sell them.

Since Project Astra is still in its early days, I’d expect Google to launch the glasses once the Gemini assistant abilities it’s developing for Project Astra are ready. There’s no point in having smart glasses in stores if the Gemini software isn’t ready.

Meanwhile, you should check out Wired’s full hands-on experience for more details.