Seven decades ago, researchers started studying the potential of artificial intelligence, reimagining the relationship between humans and machines. Just over one year ago, generative AI was introduced to the public, bringing the question of whether machines could think into sharper focus.

With AI at our fingertips, now is the time to move forward together to manage its dramatic impact on the world.

Assessing transformative technologies and their global impact has long been a focus at the International Telecommunication Union (ITU), the UN Agency for Digital Technologies. But a lot has changed since we brought the AI community together for the first AI for Good Global Summit in 2017.

AI has propelled big business decisions as companies race new technologies to market. In recent earnings calls, some big tech companies announced plans[1] to add billions of dollars in new AI investments.

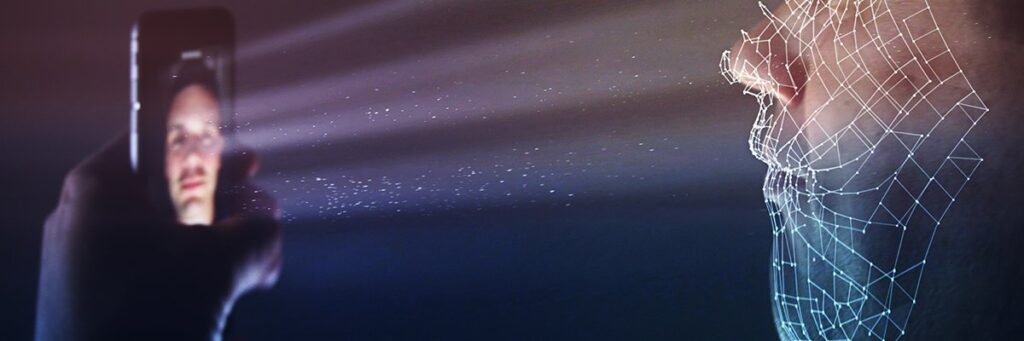

At the same time, the spread of misinformation, the rise of AI-generated deepfakes and the persistence of biases are eroding public trust. AI's climate impact is also a cause for concern.

Even more troubling is that AI is out of reach for the one-third of the world’s population that is still not connected to the Internet.

These are just some of the challenges posed by AI, and there is growing pressure for governments to take action.

From China to India to the United States, governments have begun considering how to regulate AI. The new EU AI Act is about to enter into force. In addition to the G7 Hiroshima AI Process, countries have organized a number of AI-focused conferences, including last year's UK AI Safety Summit and the AI Seoul Summit.

This fast regulatory response shows that governments are motivated to build a safe AI future. But there is a risk that many countries will be left behind, a challenge that we cannot and will not overlook.

For three days in May, ITU and its partners, including more than 40 UN organizations and the Swiss government, welcome leaders from all sectors of the AI community to the AI for Good Global Summit.

AI for Good is an important part of the global response, bringing the UN system and other stakeholders together to help align the international approach to AI with UN values.

Much work is already underway within the UN to leverage its experience and networks to facilitate consensus building, capacity development and international cooperation, reduce risks and address AI-related challenges. Guidance expected from the UN Secretary-General's High-Level Advisory Body on AI will help further refine these efforts.

Already, 70 ministers and regulators, almost half of whom are from developing countries, have committed to participate in AI for Good, which will showcase how AI can benefit society by mitigating climate change, transforming education, fighting hunger, and rescuing all of the UN Sustainable Development Goals.

This year we've set aside a full day to focus solely on how AI governance principles can be implemented globally. Governments have told us this is a priority, as regulatory regimes struggle to keep up with the pace of innovation, especially in the Global South.

I believe our efforts must center on three core principles.

First, global rulemaking processes need to be inclusive and equitable.

AI governance efforts must account for the needs of all countries, not just the technologically advanced nations that are undertaking regulatory actions now. For how can the 2.6 billion people who are currently offline around the world benefit from the AI revolution if they are not even part of the broader digital revolution?

Second, AI needs to be safe, secure, free from bias and respectful of our privacy. We also need to ensure transparency and accountability from those that provide AI systems and solutions.

Third, we must strike the right balance between risks and benefits to ensure responsible development, deployment and use of AI.

This is where instruments such as international technical standards can make a difference. And this is where we can add the necessary levels of protection against misuse and deception.

ITU is a key part of the international standard-setting system that provides guidelines for the development of technology, both making technology more predictable and ensuring that different systems can work together. This also provides market certainty and makes it easier for both large and small industry players everywhere, including in developing countries, to innovate.

Safety is a critical requirement of our standards right from the design phase. At AI for Good, for example, we will focus on watermarking standards that can help us verify whether online photos, audio and videos are real.

Built on these three principles, this year's AI for Good Global Summit comes at an important time. In an AI readiness survey being conducted by ITU, only 15% of the governments that have responded so far confirmed that they have an AI policy in place.

With the regulatory landscape still in its infancy, we have a chance to conduct the broad outreach necessary to ensure that every country is counted, regardless of its progress on AI.

Through our efforts, we need to move closer to a global agreement on how to ensure AI is truly used as a force for good for everyone.

Doreen Bogdan-Martin is the Secretary-General of the International Telecommunication Union (ITU), the UN Agency for Digital Technologies.